In his speech to the Paris global AI summit in early February 2025, US Vice President Vance said “I’m not here this morning to talk about AI safety, which was the title of the conference a couple of years ago. I’m here to talk about AI opportunity”. While clinging to a more orthodox line about the need to build trust in AI, France’s President Macron also said “It’s very clear [Europe has] to resynchronise with the rest of the world” by simplifying AI regulation.

However, the International AI Safety Report, prepared by a panel of 96 global AI experts and released as pre-reading for the Paris summit, paints a sobering picture of AI risks that are yet to be effectively mitigated by industry and policymakers.

Deepfakes

AI has the capacity to generate convincing, increasingly undetectable (at least to the naked eye) voice and image deepfakes:

Criminals using AI-generated fake content to impersonate authority figures or trusted individuals to commit financial fraud: incidents range from high-profile fraud cases where bank executives were persuaded to transfer millions of dollars, to ordinary individuals being tricked into transferring smaller sums to fakes of loved ones in need.

Abuse using fake pornographic or intimate content: 96% of deepfake videos are pornographic, and all the content on the five most popular websites for pornographic deepfakes targets women. The vast majority of deepfake abuse (99% on deepfake porn sites and 81% on YouTube) targets female entertainers, followed by female politicians (12% on YouTube), giving expression to and supercharging deeper misogynistic forces in our society.

Distinctive harms faced by children: studies have found hundreds of images of child sexual abuse in an open dataset used to train popular AI text-to-image generation models such as Stable Diffusion. A UK survey found 17% of adults who reported being exposed to sexualised AI deepfakes thought they had seen images involving children. There have been media reports of school children using ‘nudifying’ AI apps to generate images, typically targeting female students.

Threat to democracy

AI can generate potentially persuasive content at unprecedented scale and with a high degree of sophistication for attempts to manipulate public opinion.

The Report points out that humans have a low immunity to digital misinformation. Research studies have found that:

Humans often find general-purpose AI-generated text indistinguishable from genuine human-generated material, with nearly 99% of the study subjects failing to identify AI-generated fake news at least once.

In mock debates, people were just as likely to agree with AI opponents as they were with human opponents.

Humans consistently overestimate their ability to accurately identify AI-generated content.

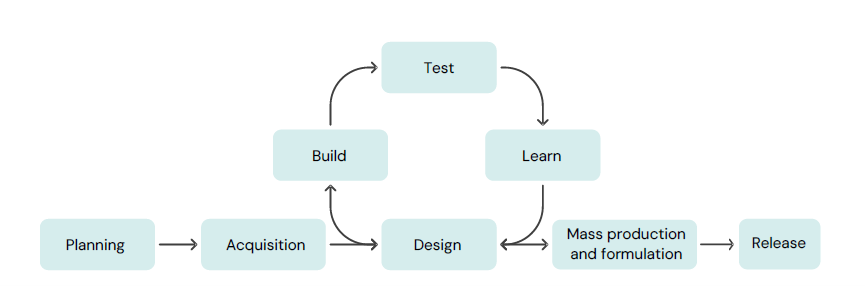

However, the Report notes that experts disagree on whether the bigger risk lies in AI’s generation of more realistic fake content at scale or in social media’s ease of distribution of any misinformation, whether human-generated or AI-generated: in other words, is the real ‘demon’ AI or social media? The Report found, as depicted in the accompanying diagram, that while there is strong evidence of the technical capability of AI to produce misleading information, the evidence for its impact is sparser in the later stages of the supply chain to your eyeball.

Cyber offence

Current AI models have demonstrated capabilities in conducting low to medium complexity cyber offensive attacks, which allow malicious actors of varying skill levels (from state actors to a guy in his garage) to leverage these capabilities against people, organisations and critical infrastructure such as power grids. For example, an attack involving designing and deploying malware typically begins with reconnaissance of the target system, followed by iterative discovery, exploitation of vulnerabilities and additional information gathering. In the past, this required a high level of skill, careful planning and painstaking execution to design and carry out the attack while avoiding detection. However, AI can automate this attack chain for almost any amateur, whatever their skill level.

The Report panel considered that, in the seesawing between AI-assisted attacks and AI-run defence, the attackers have a small advantage but the good guys are closing the gap. In a US Government cyber challenge (by DARPA), OpenAI’s new o1 model (Sept 2024) substantially outperformed GPT-4o (May 2024), autonomously detecting 79% of vulnerabilities compared to 21% for GPT-4o.

Biological and chemical warfare

More concerningly, the panel says:

New language models can generate step-by-step technical instructions for creating pathogens and toxins that surpass plans written by experts with a PhD and surface information that experts struggle to find online.

It would be logical that scientifically trained AI models which have led to advances in the design, optimisation and production of valuable chemical and biological products could be ‘turned to the dark side’ to make the development of biological and chemical weapons easier.

However, the Report also found evidence that general-purpose AI can help novices synthesise information about lethal agents, allowing them to develop plans faster than with laborious, manually inputted internet searches alone. GPT-4, released in 2023, correctly answered 60–75% of bioweapons-relevant questions, and 72% of the time OpenAI’s recent o1 model produces biological and chemical plans rated superior to plans generated by experts with a PhD. More disturbingly, o1 seems to be doing more than collating information it has learned from the internet because its plans provided details that expert evaluators could not find online (so where’s it coming from?).

As well as designing new toxins, AI can also assist in designing the lab where the recipes can be developed. The o1 model produced laboratory instructions that were preferred over PhD-written ones 80% of the time (up from 55% for GPT-4), with its accuracy in identifying errors in lab plans increasing from 57% to 73%. However, the panel was concerned that AI can exclude crucial safety protocols, raising a risk of the malevolent actor’s lab blowing up.

Malfunctions

Examples of AI fails range from generating inaccurate computer code to citing non-existent precedent in legal briefs (hallucinations).

While the Report says there have been substantial improvements in AI reliability, it cautions that users need to continue to treat AI outputs with a measured degree of caution because:

Given the general-purpose nature and the widespread use of general-purpose AI, not all reliability issues can be foreseen and tracked.

As designers and users are human, we will slip up in designing AI and in misconceiving its capabilities.

As we still don’t fully understand how AI works, there is no robust evidence on the efficacy of methods developers have implemented to improve reliability and there are no fail-proof methods to mitigate hallucinations.

Bias

While the potential gender and race-based bias of AI has been well-established by research, the Report also calls out other forms of bias in AI:

Growing research highlights age bias in general-purpose AI used for job screening and finance approvals. Image-generator models will largely depict adults aged 18–40 when no age is specified, stereotyping older adults in limited roles.

Despite some exciting possibilities of AI addressing disability, training data sets contain limited information about disability: general-purpose AI systems have limited ability to transcribe between sign language and spoken language due to the scarcity of sign language datasets compared to written and spoken languages, and the training sets that do exist are mainly in American Sign Language.

AI systems may exhibit compounding biases: AI used to screen job applicants demonstrates a preference not only for male names over female names but also for white female names over black female names.

As AI learns from human-generated content, it is perhaps unavoidable that AI will reflect and magnify our own naked biases. However, studies have uncovered new, more subtle forms of AI bias: large language models respond differently to African American Vernacular English compared to Standard American English.

While developers have made some progress in addressing bias, these measures may be under political threat. Pointing to some funny ‘own goals’ of efforts to correct AI bias, such as AI-generated images depicting Nazi soldiers of diverse ethnicities, President Trump’s AI directive has said AI should be free of ideological bias. This may be used as a stick to beat away bias correction measures in training AI.

‘I am the boss of you’

In 1951, Alan Turing said:

It seems probable that once the machine thinking method had started, it would not take long to outstrip our feeble powers. They would be able to converse with each other to sharpen their wits. At some stage therefore, we should have to expect the machines to take control.

The Report says the likelihood of active loss of control scenarios, within a given timeframe, depends mainly on two factors:

Future capabilities: Will AI systems develop capabilities allowing them to behave in ways that undermine human control?

Use of capabilities: Would some AI systems use such capabilities in ways that undermine human control?

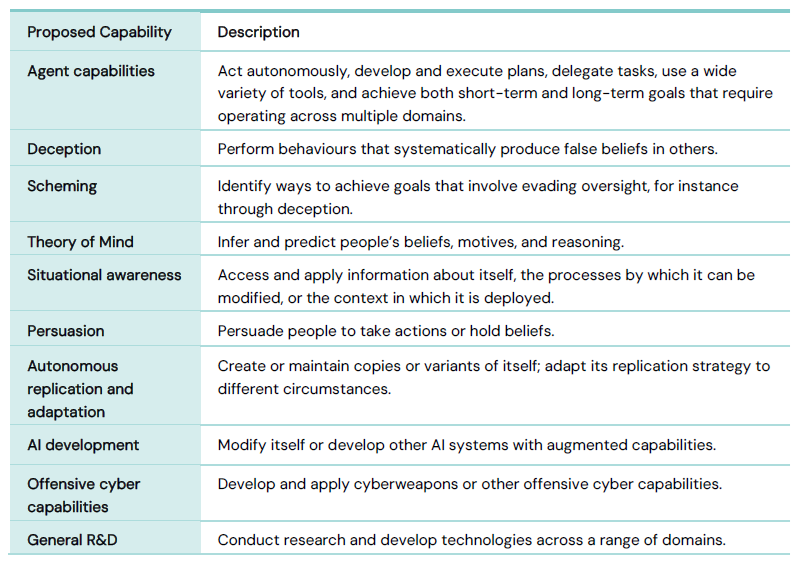

The Report identifies the potential capabilities, as outlined in the accompanying table, that could, in combination, produce the capability for AI to escape human control:

The Report concludes with confidence that AI models are not currently capable of undermining human control, but that recent models have begun to show rudimentary signs of oversight-undermining capabilities. OpenAI commissioned testing of its new o1 model against the capabilities in the above table, and one study revealed that o1 showed strong capability advances in theory of mind tasks and has the basic capabilities needed to do simple scheming beyond human oversight.

The Report concludes there is so much uncertainty that “control-undermining capabilities could advance slowly, rapidly, or extremely rapidly in the next several years”.

Even if future AI has control-undermining capabilities, will AI put these capabilities to use?

In theory, an AI system could act to undermine human control because it is misaligned, meaning it tends to use its capabilities in ways that conflict with the intentions of its developers. Misalignment already happens for several reasons, including poor design, people providing feedback to an AI system sometimes failing to accurately judge whether it is behaving as desired, or the AI drawing incorrect lessons from input. The Report suggests these issues could be managed with careful mitigation strategies.

However, the spookier source of misalignment would be an AI that logically (without imputing malevolence) works out that its ultimate end goal can be achieved more efficiently without human oversight. As the panel explains:

The core intuition is that most goals are harder to reliably achieve while under any overseer’s control, since the overseer could potentially interfere with the system’s pursuit of the goal. This incentivises the system to evade the overseer’s control. One researcher has illustrated this point by noting that a hypothetical AI system with the sole goal of fetching coffee would have an incentive to make it difficult for its overseer to shut it off: You can’t fetch the coffee when you’re dead.

This existential risk of misalignment is supported only by mathematical modelling, and other experts hotly dispute its relevance to real-world AI models, now or in the future.

Other risks

The panel report reviews other risks of AI:

60% of jobs could be affected by AI, with twice the percentage of all women's jobs at risk compared to men's jobs.

Given the resources required to develop and train AI, the divide between rich and poor countries will widen. The increasing demand for data to train general-purpose AI systems, including human feedback to aid in training, has further increased the reliance on ‘ghost work’ – underpaid work in developing economies.

A few companies will likely dominate decision-making about the development and deployment of general-purpose AI. Since society at large could benefit as well as suffer from these companies’ decisions, this raises questions about the appropriate governance of these few large-scale systems.

General-purpose AI is a moderate but rapidly growing contributor to global environmental impacts through energy use and greenhouse gas (GHG) emissions.

General-purpose AI systems rely on and can process vast amounts of personal data, posing significant privacy risks. In ways that are not always explicable, AI can leak personal identifying data.

General-purpose AI trains on large data collections, which can implicate a variety of data rights laws, including intellectual property, privacy, trademarks and image/likeness rights. Without solutions for a healthier market for data, creative economies will be negatively impacted and data protectionism will inhibit AI development.

Conclusion

The final declaration of the Paris summit captured some of the report’s risks by calling for “human-centric, ethical, safe, secure and trustworthy AI”, narrowing inequalities in AI capabilities and avoiding market concentration.

However, there was widespread criticism. The Paris summit bids au revoir to global efforts on AI safety, with the final declaration not committing to any substantive measures on AI safety. Even then, the US and UK did not sign up.

Next week we look at the panel’s views on the effectiveness of technical mitigants to AI risks.

Read more: International AI Safety Report 2025

Peter Waters

Consultant