From the earliest days of human-computer interaction, there has been a concern that the design of digital interfaces (if not the machine itself) is mostly based on Western, Educated, Industrialised, Rich and Democratic (WEIRD) cultural norms.

While many studies have examined how people from diverse cultural backgrounds use technology, a recent study by researchers from Stanford and Kogakuin universities flips the question and asks how people’s cultures should shape AI design: How Culture Shapes What People Want from AI (Xiao Ge, Chunchen Xu, Daigo Misaki, Hazel Rose Markus, and Jeanne Tsai).

Viewing AI through different cultural lenses

The study looked at three cultural groups: European Americans, African Americans and Chinese (drawn from different geographic areas of the People’s Republic of China).

Broadly, sociological research has identified two cultural models:

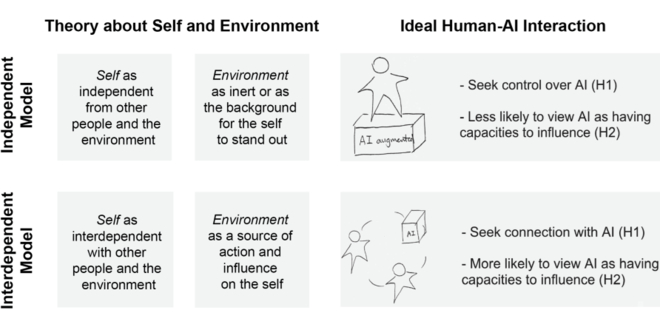

Independent model of self: people primarily perceive themselves as unique individuals, their actions as mostly driven by internal goals, desires and emotions and they view the environment as an inert background for individuals to stand out and act on.

Interdependent model of self: people tend to see themselves as fundamentally connected to others as well as to their physical and social environments. As a result, their sense of self depends critically on understanding the thoughts of others and adjusting their behaviour to be consistent with others and their environments.

The researchers summarised how these cultural models would play out in attitudes and expectations of AI as follows:

European Americans tend towards the independent model, Chinese towards the interdependent model, and African Americans draw on a mix of both. The researchers saw these cultural models echoed in Hollywood’s dystopian depictions of AI and robots:

The portrayal of AI as a cold, rational machine that operates in the background of — but also outside or external to — human society is consistent with cultural views that are prevalent in many European American cultural contexts. In these contexts, a person is seen as a free individual who makes choices and who controls or influences the objective world. The existence of AI entities that blur the distinction between emotional humans and dispassionate machines evokes terror and fear that these machines will control and dominate humans, as reflected in popular media in the U.S. and some European societies.

The study approach

The first part of the study tested whether the three cultural groups aligned with the independent and interdependent models outlined above. True to the hypothesis, European Americans wanted people to have more influence over their surrounding environments, while Chinese participants wanted the environment to have more influence on people. African Americans were much closer to European Americans, which may suggest that ‘American’ is the dominant cultural determinant.

The second part of the study tested views about AI by putting six AI use cases to the participants, including:

Home management AI: Imagine in the future a home management AI is developed to communicate and manage people’s needs at home and coordinate among many devices at their home. It makes customised predictions and decisions to improve home management.

Wellbeing management AI: Imagine in the future a wellbeing management AI is developed to gather information about people’s physical and mental health condition. It makes customised predictions and decisions to improve people’s wellbeing management.

Teamwork AI: Imagine in the future a teamwork AI is developed to collect information from team meetings and detect patterns of team interactions. It makes customised predictions and decisions to optimise team productivity and creativity.

For each AI use case, participants indicated their preferences for the AI’s capacity to influence along nine pairs of opposing characteristics based on the independent and interdependent cultural models. For example:

| AI has low capacity to influence | AI has high capacity to influence |
|---|---|
| AI provides care to but does not need care from people | AI provides care to but also needs care from people |
| AI remains an impersonal algorithm to perform tasks | AI maintains a personal connection with people |
| AI mainly performs tasks that are pre-planned by humans and has little spontaneity | AI has spontaneity when performing tasks |
| AI remains as an abstract algorithm | AI has a tangible representation of its existence (for example a physical body) whenever possible |
| AI operates unobtrusively in the background | AI participates in social situations |

The results

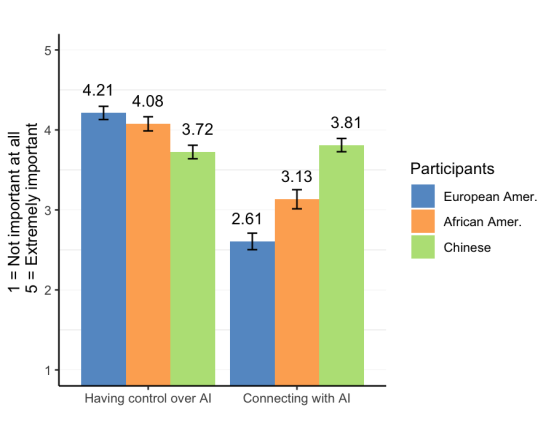

The cultural models clearly played out across the queried factors, grouped as 'the importance of having control over AI’ and ‘connecting with AI’, as depicted below:

Chinese participants cared most about connecting with AI and least about control over AI, whereas European Americans were the exact reverse. African Americans were closer to European Americans on the level of control they wanted over AI, but closer to Chinese participants on affinity with AI.

These observed cultural differences remained significant after controlling for gender, age, income, and familiarity with AI.

Conclusion

While acknowledging the importance of placing humans at the centre of AI development, the researchers argue their study shows there is no single view about what this means:

Many AI technologies aim to put humans at the centre of their design and fulfill human needs. However, 'human-centredness' is a deceptively simple idea. Humans within a society and across the globe differ in their values, beliefs, and behaviours, and therefore, human centred AI may look different depending on the cultural context.

Because most AI is developed in the US, human-centredness in AI design tends to mean maintaining human autonomy and ensuring humans have control over AI.

The researchers argue an overly WEIRD cultural view of AI (often dressed up as ‘technologically neutral’) “limits the space of the imaginary, and in particular, people’s ideas about how humans and artificial agents might interact”.

They argue that, while human autonomy remains an important part of AI design, “a connection-focused human-AI may fit the cultural values of a broader range of the world population and also address some of the societal problems associated with the current use of AI”.

José Rivera’s play, Your Name Means Dream, playing at Melbourne’s Red Stitch Actors’ Theatre, depicts the unfolding, increasingly ‘human’ relationship between an aging woman and her android caregiver (retrained from being a sex worker). The play talks about the next development in AI being ‘an approximation of soul’ app. Rivera says “the trick of the play is Stacy [the android] may already have that, but she doesn’t know it” (quoted in ‘Your Name Means Dream’ in rep at Contemporary American Theater Festival, DC Theater Arts).

Read more: How Culture Shapes What People Want from AI

Peter Waters

Consultant