In Philip K. Dick’s novel ‘Do Androids Dream of Electric Sheep?’, humans need to rely on sophisticated tests to distinguish robots from humans. As AI developers race to make generative AI ever more anthropomorphic, are we entering that world? See more here.

A recent paper by a galaxy of AI experts from Harvard, Oxford, Microsoft, OpenAI, Berkeley and MIT (among others) proposes a new tool to address the rising tide of AI-generated misinformation: personhood credentials (PHCs) that “empower users to demonstrate that they are real people, not AIs, to online services, without disclosing any personal information.” See more here.

The internet's anonymity conundrum

The authors frame the challenge policy makers face in addressing misinformation/disinformation as going to the very core of the internet’s design:

The internet’s pioneers saw anonymity as a fundamental pillar of privacy and freedom of expression. They built its architecture to let people participate in digital spaces without disclosing their real identities. The benefits from this approach are numerous, and worthy of steadfast protection. For example, anonymity allows people in oppressive regimes to express their opinions without fear of retribution. However, these benefits have come at some cost - one such cost is a lack of accountability for deceptive misuse. Malicious actors have long used misleading identities to carry out abuse online. For example, attackers manipulate perceptions of public opinion by spreading disinformation through deceptive 'sock puppet' accounts - appearing to represent distinct people and thus lending their claims more credibility.

While we have seen a rising tide of online misinformation/disinformation, the authors argue “the network remains largely usable for ordinary users” because, on the read side, defences such as spam filters, IP blocklists, firewalls, and vigilant security analysts work tolerably well at detecting and mitigating deceptive attacks, and more importantly, on the send side, the attacks themselves are resource-constrained because they have to rely on human labour.

Why AI is different

AI risks blowing through these read and send constraints because:

Sophisticated AI systems are increasingly capable of breaching technical barriers, and can now readily solve CAPTCHA tests that involve reading squiggly letters or solving rotating puzzles. AI can use avatars to spoof identification checks, such as those requiring a selfie with a matching driver’s licence. Once AI is through the technical barriers, human readers are presented with AI-powered messages populated with realistic human-like avatars that share high-quality content and that take increasingly autonomous actions: for example, simulating a real-looking person on a video chat who makes persuasive arguments tailored to a specific recipient.

AI is becoming increasingly scalable, as it grows both more affordable and more accessible. No longer does a malicious actor need a troll factory peopled with low-paid workers. No longer is misinformation/disinformation solely a ‘soft war’ strategy of state actors. Many open-weight models are available through user-friendly interfaces, decreasing the technical skill required.

The authors express concern that, confronted with the need to address AI-powered misinformation/disinformation, governments will feel that their only option is to “resort to invasive measures for verifying users’ identities online, overturning the Internet’s longstanding commitment to privacy and civil liberties”. Hence the authors’ drive to find an alternative technical approach which addresses human verification while preserving the Internet’s core value of anonymity.

How PHCs would work

The proposal for PHCs relies on two important and current deficits of AI: AI systems cannot convincingly mimic people offline, and AI cannot bypass state-of-the-art cryptographic systems.

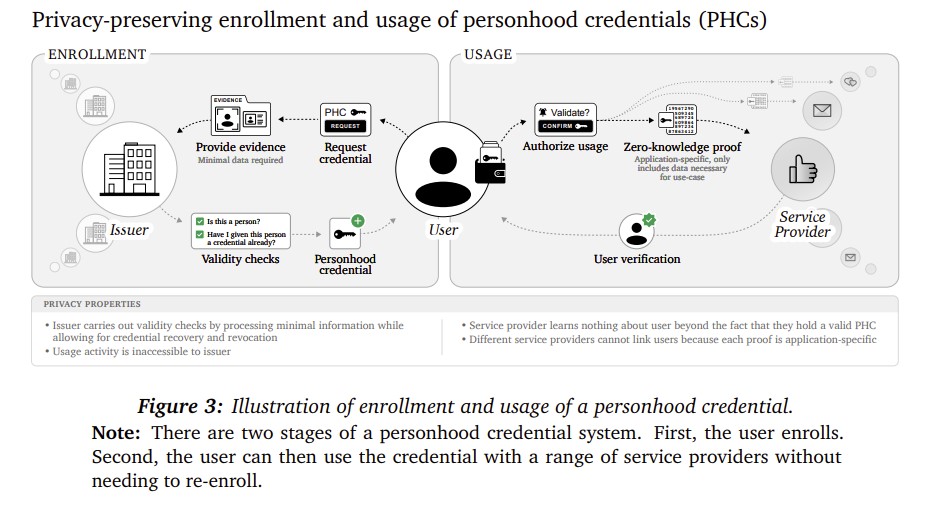

Essentially, PHCs rely on an intermediary (called an issuer) which is the bridge between the offline identity of humans and online services and platforms verifying whether they are dealing with a human. The process is depicted in the following diagram:

The issuer issues a digital credential through a process referred to as enrolment. This is based on sight and verification of some real-world, human-only documentation, such as a driver’s licence or birth certificate. Once a person is enrolled with an issuer, when that person sends a digital message, wants to browse a web site or seeks to log onto a platform, the third-party service provider can request evidence that the user holds a PHC as part of the service provider’s standard authorization process, referred to as usage.

The authors propose PHCs would involve the following rules and safeguards:

There could be more than one issuer. As individual internet users, we would have a choice of which issuer’s PHC to take up. Multiple issuers would help address concerns about concentration of market power and a single government or private enterprise holding a vast store of personal information.

There would be credential limits (one credential per person per issuer) and each issuer would have rules to mitigate the impact of transfer or theft of credentials. While a single user could obtain multiple PHCs by subscribing to more than one issuer, there would be a natural ceiling on the number of PHCs an individual could hold. Each would be tethered to the same human identity, although different documents evidencing that identity might be used or required in each issuer’s enrolment process. Limiting the number of PHCs per end user is seen as reducing the risk of fraud.

To mitigate the theft or transfer of credentials, there would be an expiry date on a PHC, and end users would need to reapply for enrolment with the same or a different issuer.

To preserve privacy, a service provider making an ‘is this a human’ inquiry of an issuer learns nothing of the end user other than 'this person has a PHC'. This relies on zero-knowledge proofs, and would draw on existing public key cryptography technology, which the authors explain as follows:

A PHC issuer could maintain a list of public keys (each related to a valid credential), each of which has a paired secret private key. When a new person successfully enrols in the PHC system, the issuer lets this person add exactly one public key to the list of valid keys - the private key is known only to the user enrolling.

By default, service providers or issuers cannot trace or link usage activity across uses, even if issuers and service providers collude. Service providers do not learn anything when a PHC that has been used with their service is used with another service. An individual service provider may need a method that allows them to check whether a PHC has been used with their service before: for example, when they are offering one-off benefits to first time users. The PHC system can accommodate this without compromising privacy: when a user interacts with a service, they compute a unique nullifier — a number or string — based on their credential and the service’s identity. The service can store these nullifiers to track whether a credential has been used before, without learning any information about the user’s credential, as the service cannot undo the computation to derive the specific credential.
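
As a rough illustration of the key list and nullifier ideas described above, the following Python sketch uses only the standard library. It is not the authors' design: a plain hash stands in for real public key derivation, an HMAC stands in for the nullifier computation, and the zero-knowledge proof that would tie a nullifier to a valid credential without revealing which one is omitted entirely. The names `Issuer`, `Service`, `public_key_for` and `nullifier`, and the one-year validity period, are hypothetical.

```python
import hashlib
import hmac
import secrets
from datetime import datetime, timedelta, timezone


def public_key_for(private_key: bytes) -> str:
    """Toy stand-in for key derivation (a hash, NOT real public key crypto)."""
    return hashlib.sha256(b"phc-public-key:" + private_key).hexdigest()


def nullifier(private_key: bytes, service_id: str) -> str:
    """Per-service value: stable for one service, unrelated across services."""
    return hmac.new(private_key, service_id.encode("utf-8"), hashlib.sha256).hexdigest()


class Issuer:
    """Hypothetical issuer: one live credential per enrolled person."""

    def __init__(self, validity: timedelta = timedelta(days=365)):
        self.validity = validity
        self.expiry_by_person = {}  # person -> expiry of their current credential
        self.valid_keys = {}        # public key -> expiry

    def enrol(self, person_id: str, public_key: str, now: datetime) -> bool:
        # Assumes the person's documents were already checked offline.
        current = self.expiry_by_person.get(person_id)
        if current is not None and now < current:
            return False  # one credential per person per issuer
        expiry = now + self.validity
        self.expiry_by_person[person_id] = expiry
        self.valid_keys[public_key] = expiry
        return True

    def is_valid(self, public_key: str, now: datetime) -> bool:
        expiry = self.valid_keys.get(public_key)
        return expiry is not None and now < expiry


class Service:
    """Hypothetical service that only remembers nullifiers it has seen."""

    def __init__(self, service_id: str):
        self.service_id = service_id
        self.seen = set()

    def first_use(self, value: str) -> bool:
        # A real service would also verify a zero-knowledge proof here.
        if value in self.seen:
            return False
        self.seen.add(value)
        return True


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    private_key = secrets.token_bytes(32)  # known only to the user
    issuer = Issuer()
    print(issuer.enrol("person-1", public_key_for(private_key), now))
    shop = Service("shop.example")
    token = nullifier(private_key, shop.service_id)
    print(shop.first_use(token), shop.first_use(token))
```

In this sketch the issuer records who has enrolled separately from the list of valid keys, and the service stores only nullifiers, so neither side holds a table linking a person to their activity. Whether a real deployment could keep that separation would depend on the cryptographic scheme chosen.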

Benefits

The authors identified the following benefits of PHCs in the war on misinformation/disinformation:

Making sock puppetry harder: by boosting or labelling accounts as belonging to an individual (unidentified) human, or by offering users ways to screen out accounts that are not so verified, both AI-generated sock puppets and multiple false accounts generated by a single human will be easier to detect.

Bot attacks: botnets involve coordinated groups of entities meant to appear as distinct users, for example to overwhelm a web service with a large number of distinct-appearing users in a distributed denial-of-service (DDoS) attack. By checking for PHCs, defence systems can restrict each individual to a limited number of accounts or inbound messages.

AI assistants: the next big thing in AI could be personal assistants which have a high degree of autonomy to act on behalf of their end users. The authors say that this could facilitate “a new form of deception: bad actors can deploy AI systems that, instead of pretending to be a person, accurately present as AI agents but pretend to act on behalf of a user who does not exist”. Spookily, humans could end up unknowingly being engaged to work for AI. PHCs could do two things: first, they could offer a way to verify that AI agents are acting as delegates of real people, signalling credible supervision without revealing the principal’s legal identity; and second, if a human’s personal assistant exercises its autonomy to act in harmful or unacceptable ways, removal of the human’s PHC for the AI could be a way of ensuring end users control their personal assistants.
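
The per-person limit mentioned under bot attacks above could be enforced along the following lines. This is a minimal Python sketch under stated assumptions, not the authors' implementation: it assumes the service has already verified the user's PHC and received a stable, service-specific pseudonym for that credential (such as a nullifier), and the class name `AccountLimiter` and the account limits used are hypothetical.

```python
from collections import defaultdict


class AccountLimiter:
    """Caps how many accounts one verified person can hold on a service."""

    def __init__(self, max_accounts: int = 3):
        self.max_accounts = max_accounts
        self.accounts = defaultdict(set)  # pseudonym -> account names

    def register(self, pseudonym: str, account_name: str) -> bool:
        owned = self.accounts[pseudonym]
        if account_name in owned:
            return True  # already registered, nothing to do
        if len(owned) >= self.max_accounts:
            return False  # this person has reached the cap
        owned.add(account_name)
        return True


if __name__ == "__main__":
    limiter = AccountLimiter(max_accounts=2)
    for name in ["acct-1", "acct-2", "acct-3"]:
        print(name, limiter.register("pseudonym-abc", name))
```

The service never needs to know who is behind a pseudonym. It only needs to know that the same credential stands behind each request, which is enough to stop one person (or one bot operator) from presenting as a crowd.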

Disadvantages

While anonymity may have been an original pillar of the Internet, once the Internet became a tool of mass use, convenience and ease of use for end users seem to trump everything, even our privacy concerns. The authors acknowledge that “[a] need for frequent authentication of one’s PHC could contribute to friction and frustration for users”. Wesley Smith of National Review puts it more scathingly (with a touch of paranoia about governments added in):

Just what we need: more bureaucracy. Besides, why would we trust that system any more than the current one? Governments have proven themselves quite incapable of keeping information private, as have the largest corporations.

The authors candidly acknowledged that while PHCs might solve some problems of misinformation/disinformation, they could create distortions and disincentives for public discourse:

It is important to consider how PHCs could reduce people’s willingness to speak freely and to dissent in digital spaces. Individuals may fear that their online speech will be linked to their offline identity, even though PHCs do not convey one’s identity. Individuals who choose not to verify their personhood through PHCs might find their contributions discounted or even labelled as disinformation. On the other hand, statements that might previously have been dismissed due to doubts about their origin could earn credibility when accompanied by PHC verification.

The authors acknowledged that, given the personal information which the issuer would need to sight and verify to issue the PHC, there would be substantial privacy issues involved. For example (and not one endorsed by the authors), Worldcoin (backed by Sam Altman of OpenAI) proposes to use a person’s iris to identify them as human: according to the company, the iris is the only biometric measure that’s sufficiently unique to identify all the humans on the planet. See more here.

The authors also identified the need for standardisation and even interoperability between individual PHC systems. If there are multiple issuers, it would be cumbersome for digital service providers if they had to build standalone recognition systems to read each type of PHC, and inconvenient for users if they had to hold multiple PHCs from different issuers to be able to access web sites and digital platforms.

The authors suggested one approach might be to set up a global consortium of participating organisations and governments, following a multi-stakeholder governance model, along the lines of the Internet Corporation for Assigned Names and Numbers (ICANN).

Conclusion

In the film Blade Runner, the main character passes the Voight-Kampff test, but the final image of the origami unicorn left outside his apartment suggests that, as his dreams are known, he may be a replicant after all. Hence the fragility of the distinction between human and machine, or at least of online tests of that difference.

Read more here: Personhood credentials: Artificial intelligence and the value of privacy-preserving tools to distinguish who is real online

Peter Waters

Consultant