The United States’ Cybersecurity and Infrastructure Security Agency (CISA) recently released guidelines, the “Safety and Security Guidelines for Critical Infrastructure Owners and Operators” (the CISA Guidelines), to help critical infrastructure owners and operators mitigate AI risk.

The CISA Guidelines add to the steadily increasing volume of international soft law guidance on the use of AI, which has seen broad cooperation among countries as they grapple with rapid AI developments and the widespread rollout of new AI technologies.

What sets the CISA Guidelines apart is that they are among the first to focus on AI in a critical infrastructure context. Their release is also timely, given current international efforts to regulate AI and the swift incorporation of AI into critical infrastructure systems, for example in health care (diagnosis and predicting patient outcomes), transportation (self-driving vehicles) and finance (detecting fraud).

Why is this relevant in Australia?

Critical infrastructure risks have taken the spotlight in Australia and many other countries in recent years, as seen in the Australian Government’s numerous amendments to bolster and modernise Australia’s core critical infrastructure legislation, the Security of Critical Infrastructure Act 2018 (Cth) (SOCI Act). The Government is currently considering further legislative reforms to the SOCI Act, as well as reforms addressing ransomware, data retention and cyber incident response, to strengthen protections for critical infrastructure as part of its goal of making Australia a world leader in cyber security by 2030 under its 2023-2030 Australian Cyber Security Strategy (the Strategy).

The use of AI to protect critical national infrastructure unsurprisingly appears to form part of the Strategy. For example, in August 2024, Australia’s national science agency, the Commonwealth Scientific and Industrial Research Organisation (CSIRO), announced a research partnership with Google aimed at creating new AI-powered technologies to enhance software security in line with the Strategy.

The partnership seeks to develop AI tools for automated vulnerability scanners and data protections to assist critical infrastructure owners to quickly and accurately identify potential security vulnerabilities in their software supply chains. The partnership will also focus on designing a secure framework that offers clear guidance to critical infrastructure operators about current and future security requirements, which are regulated under the SOCI Act.

For further information on the 2023-2030 Australian Cyber Security Strategy and the development of and reforms to the SOCI Act, see our previous articles:

The curtain falls - Final reforms to Australia’s critical infrastructure laws

SOCI Critical infrastructure risk management program Rules now registered

Owners, operators, and direct interest holders in critical infrastructure assets located in Australia are currently subject to a suite of obligations under the SOCI Act. These include the requirement to establish, maintain, and comply with a critical infrastructure risk management program (CIRMP). The CIRMP is designed to manage the 'material risk' of hazards that could impact their critical infrastructure assets. Entities subject to the CIRMP obligation must identify and, as far as reasonably practicable, take steps to minimise or eliminate these material risks.

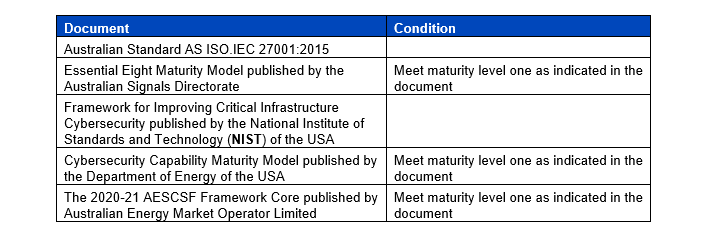

There are specific CIRMP obligations relating to four key hazard vectors, one of which is cyber and information security: for example, risks to the digital systems, computers, datasets, and networks that underpin critical infrastructure systems, including improper access, misuse, or unauthorised control. An entity’s CIRMP must describe the cyber and information security hazards that could have a relevant impact on its critical infrastructure asset (for example, phishing, malware and credential harvesting) and the mitigations for them, and must comply with one of a number of cyber frameworks specified in the CIRMP Rules.

The NIST ‘Framework for Improving Critical Infrastructure Cybersecurity’ is one of the more widely used cyber frameworks. It was updated in 2024 to expand its applicability and to include new guidance on cybersecurity governance and continuous improvement practices. The updated version has been renamed the NIST Cybersecurity Framework (CSF) 2.0 and refers to risks from emerging technologies, including AI.

The NIST Cybersecurity Framework relevantly notes that NIST’s 2023 Artificial Intelligence Risk Management Framework (AI RMF) was developed to help address AI risks, namely to improve the ability to incorporate trustworthiness considerations into the design, development, use, and evaluation of AI products, services, and systems. The CISA Guidelines themselves are aligned to the NIST AI RMF, as described in further detail below.

Given the rapid growth of AI and the risks associated with it, whether viewed as distinct from, or a subset of, cyber risk, it is possible that a requirement to comply with the NIST AI RMF, or with other equivalent international AI frameworks that have been or are being developed, will become part of the CIRMP requirements, or that obligations to manage AI risks will otherwise be included in future reforms to the SOCI Act. Further, those who own or operate critical infrastructure in Australia and already use, or intend to use, AI in their critical infrastructure systems should take note of the CISA Guidelines. They contain useful insights that are not US-specific and can be applied to any critical infrastructure system, some of which are outlined below.

Why AI presents a new and significant risk to critical infrastructure

Before delving into key takeaways from the CISA Guidelines, it is worth stepping through the specific nature of AI and the resulting risks, which both necessitate guidance such as the CISA Guidelines and explain widespread societal concerns. While the CISA Guidelines address AI risks to safety and security that are uniquely consequential to critical infrastructure, they go into limited detail on the features of AI that create risks distinct from, and greater than, cyber risks to critical infrastructure systems.

NIST defines AI as “[a] branch of computer science devoted to developing data processing systems that performs functions normally associated with human intelligence, such as reasoning, learning, and self-improvement”.

Notwithstanding that no universally accepted classification of AI exists, NIST’s definition of AI highlights the inherently transformative nature of AI, which can also be characterised as a semi-autonomous shapeshifter based on its ability to create its own data sets, write code and predict and resolve problems. In a critical infrastructure context, AI can involve activities such as pattern matching, decision making and anomaly detection where real-time analysis is combined with the critical infrastructure system to continually adapt to changing circumstances.

These AI activities can involve running the core components of a critical infrastructure network, such that any failure in high-risk critical infrastructure AI functions may lead to catastrophic malfunctions in critical infrastructure systems. This is distinct from ‘typical’ cyber threats today, where lines of intrusive or ‘broken’ code can be identified and fixed once; by contrast, a malicious AI system could identify system gaps and continuously generate new lines of intrusive code.

Given that AI systems can update their data sets more quickly and more often than a person can, the extreme risks of AI to critical infrastructure systems are clear. Notwithstanding this, AI offers many beneficial uses in critical infrastructure systems, some of which are already being realised, for example predictive analysis to proactively detect potential component failures, post-failure analysis, performance operation, and fraud and intrusion prevention and detection. Balancing safety and the potential for malicious use of AI in critical infrastructure systems against fostering innovation and promoting opportunities to improve the reliability, evolution and performance of critical systems will continue to generate society-wide discussion and regulation, as well as soft law guidance such as the CISA Guidelines.

Key takeaways from the Guidelines

The CISA Guidelines start with the obvious common-sense point that “[a]ny effort to mitigate risk should start with an assessment of those risks”. Before leaping into a discussion of the specific risks, the CISA Guidelines highlight the dual nature of AI: it presents transformative opportunities and solutions for many critical infrastructure functions, but it can also be misused or weaponised, leaving critical infrastructure systems vulnerable to critical failures, physical attacks, and cyber attacks.

While a refreshing departure from the usual doom and gloom messaging of cyber-risk guidelines, the CISA Guidelines neatly illustrate the unique risk management challenge posed by the inherently shapeshifting nature of AI: the bad is the flipside of the good.

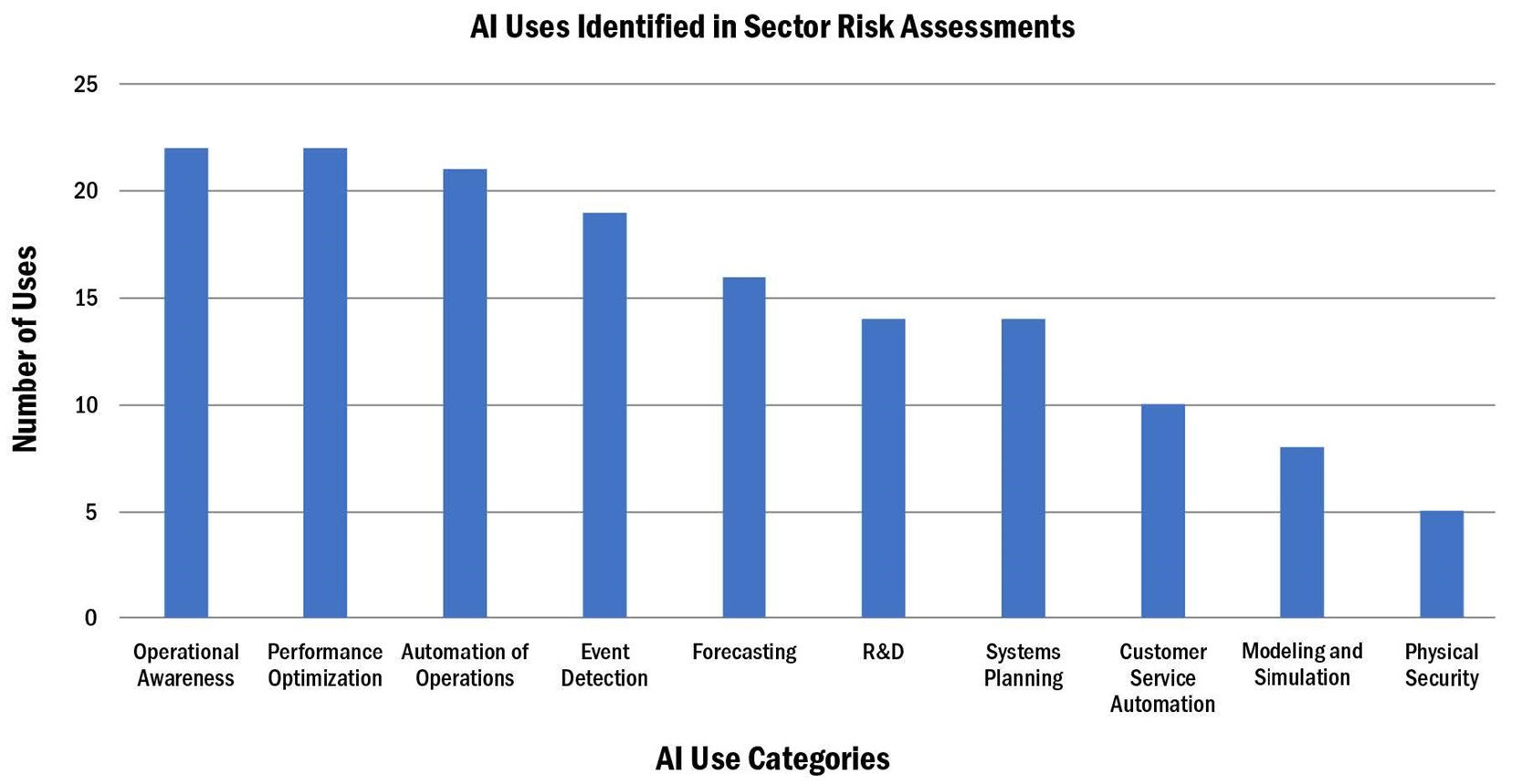

The CISA Guidelines helpfully distil more than 150 beneficial uses of AI, identified in sector-specific AI risk assessments undertaken by the US federal agencies responsible for each of the US’s critical infrastructure sectors, into 10 AI use categories, a categorisation intended to ease interpretation and discussion of AI uses. These 10 categories are shown in Figure 1 below.

Figure 1: Prevalence of the types of AI uses

The top four AI use categories are:

Operational Awareness: involves using AI to gain a clearer understanding of an organisation’s operations. For example, using AI to monitor network traffic and identify unusual activity, enhancing cybersecurity.

Performance Optimisation: involves using AI to improve the efficiency and effectiveness of processes or systems. For example, using AI to optimise supply chain operations, reducing costs, and improving delivery times.

Automation of Operations: refers to using AI to automate routine tasks and processes in an organisation, such as data entry or report generation. For example, using AI to automate the process of sorting and analysing large amounts of data.

Event Detection: refers to the use of AI to detect specific events or changes in a system or environment. For example, the use of AI in health monitoring systems to detect abnormal heart rates.

Each of these top four AI use categories can involve the core components of critical infrastructure networks. As described in the section above on the characterisation of AI as a shapeshifter, the use of AI in central components of critical infrastructure systems needs to be carefully considered before implementation and during use to mitigate the potentially catastrophic risks.

Turning to the bad, the CISA Guidelines categorise AI risks in a critical infrastructure context into the following three distinct categories of system-level risk:

1. Attacks Using AI: The use of AI to plan or execute physical or cyber attacks on critical infrastructure. The risks to be managed by critical infrastructure operators include:

Using AI to augment threat actor capabilities and operations: for example, autonomous malware, automatic parsing of text for vulnerability insights, modelling and model inference and completion, cyberattack detection evasion, machine-speed decision-making, and prompt injections to reveal sensitive information.

Using AI-enabled psychological manipulation to trick users into revealing sensitive information or performing actions that compromise security controls, including the use of deepfakes or AI enhanced phishing attempts.

Using AI-enabled attacks, such as cyber compromises, physical attacks, and social engineering to target and disrupt vulnerable logistics supply chains for critical materials.

Using AI systems to collect and interpret public data to reverse engineer intellectual property or other sensitive information.

2. Attacks Targeting AI Systems: targeted attacks on AI systems supporting critical infrastructure. The risks to be managed by critical infrastructure operators include:

Intentionally modifying algorithms, data, or sensors to cause AI systems to behave in a way that is harmful to the infrastructure they serve.

Malicious attempts to steal the training data or parameters of a model, or reverse engineer the functionality of a model.

Attacks to render an AI system unavailable to its intended users, either directly (disrupting the AI system itself) or indirectly (disrupting the availability of necessary data or computing resources).

3. Failures in AI Design and Implementation: deficiencies or inadequacies in AI systems leading to malfunctions or unintended consequences. The risks to be managed by critical infrastructure operators include:

Malfunction or unexpected behaviour facilitated by excessive permissions or poorly defined operational parameters for AI systems.

A shortage of personnel trained in the design, integration, training, management, and interpretation of AI systems could result in inappropriate selection, installation, and use of AI systems.

Human operators’ excessive reliance on an AI system’s ability to make decisions or perform tasks, potentially resulting in operational disruptions if the AI system fails.

Third-party vendors could use AI products that depend on data sets that have not been validated, or on other external factors, which could lead to malfunction or operational disruption.

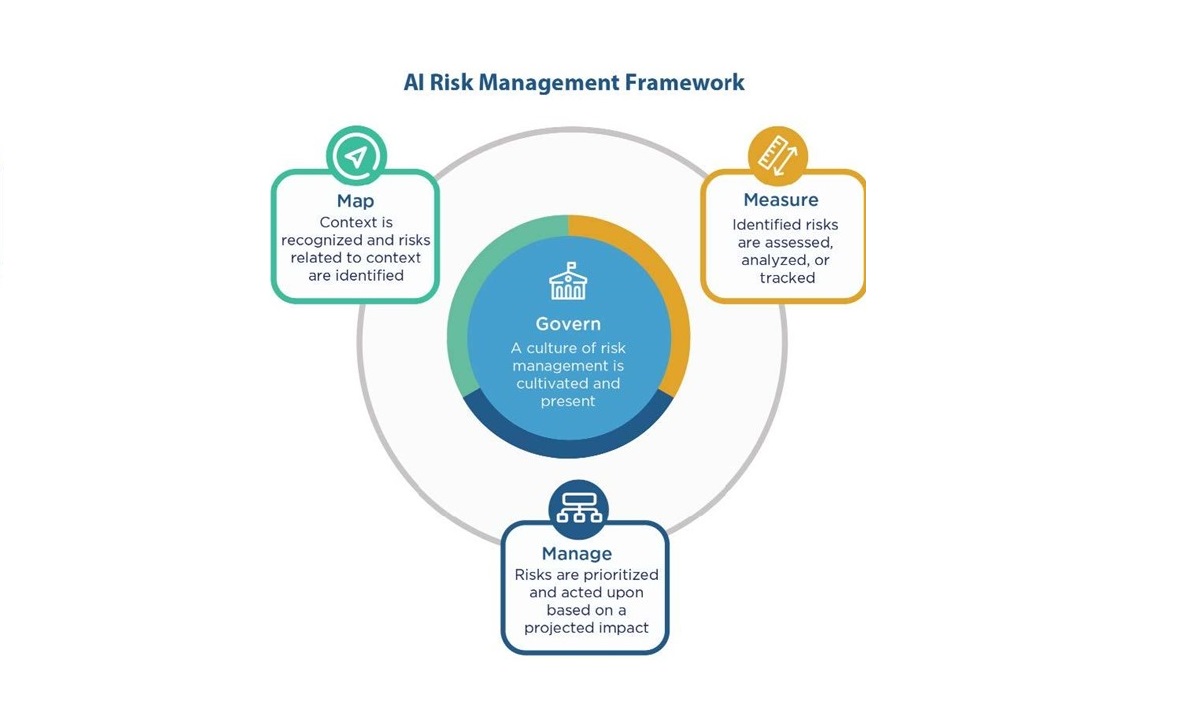

The CISA Guidelines build upon the NIST AI RMF and are intended to foster efforts to integrate the AI RMF into critical infrastructure enterprise risk management programs by directing critical infrastructure owners and operators to address the risks of AI systems using the four AI RMF functions: Govern, Map, Measure, and Manage. See Figure 2 below:

Figure 2: NIST AI Risk Management Framework

Further details on these four elements are as follows:

1. Govern: Establish an organisational culture of AI risk management. The aim is to prioritise and take ownership of safety and security outcomes, embrace radical transparency and build organisational structures that make security a top business priority. This includes detailing plans for cybersecurity risk management and establishing secure by design practices. Specific suggested measures include:

Establish roles and responsibilities with AI vendors for the safe and secure operation of AI systems in critical infrastructure contexts. This includes documented plans and regular communication regarding the integration testing, data, input, model and functional validation, and continuous maintenance and monitoring of the AI systems.

Integrate AI threats, AI incidents, and AI system failures into all information-sharing mechanisms for relevant internal and external stakeholders.

Invest in workforce development and subject matter expertise that establish and sustain a skilled, vetted, and diverse AI workforce, i.e. labour cost saving should come way down your list of goals.

2. Map: Understand your individual AI use context and risk profile. The aim is to establish and understand the foundational context from which owners and operators of critical infrastructure can evaluate and mitigate AI risks. This can be achieved by creating an inventory of all current or proposed AI use cases, requiring AI impact assessments as part of the deployment or acquisition process for AI systems, and reviewing AI vendor supply chains for security and safety risks. Specific measures include:

Establish which AI systems in critical infrastructure operations should be subject to human supervision and human control of decision-making to address malfunctions or unintended consequences that could affect safety or operations.

Review AI vendor supply chains for security and safety risks. This review should include vendor-provided hardware, software, and infrastructure to develop and host an AI system and, where possible, should incorporate vendor risk assessments and documents, such as software bills of materials (SBOMs), AI system bills of materials (AIBOMs), data cards, and model cards.

Gain and maintain awareness of new failure states, and of the need for alternate process redundancy, arising from the incorporation of AI systems into safety and incident response processes.

3. Measure: Develop systems to assess, analyse, and track AI risks. The aim is to identify repeatable methods and metrics for measuring and monitoring AI risks and impacts throughout the AI system lifecycle. This includes defining metrics and approaches for detecting, tracking and measuring known risks, errors, incidents or negative impacts, and continuously testing AI systems for errors or vulnerabilities. Specific measures include:

Evaluate AI vendors and AI vendor systems from a safety and security standpoint, assessing key areas of concern, such as data drift or model drift, vendor AI expertise, and ongoing operation and maintenance.

Build AI systems with resilience in mind, enabling quick recovery from disruptions and maintaining functionality under adverse conditions during the deployment phase.

Establish processes for reporting AI safety and security information to, and receiving feedback from, potentially impacted communities and stakeholders: i.e. don’t hold the ‘bad news’ close to your corporate chest, because sharing information about risks helps build up a wider shared knowledge base.

4. Manage: Prioritise and act upon AI risks to safety and security. The aim is to implement and maintain identified risk management controls to maximise the benefits of AI systems while decreasing the likelihood of harmful safety and security impacts. This can be achieved by following cybersecurity best practices, implementing new or strengthened mitigation strategies, and applying appropriate security controls. Specific measures include:

Apply mitigations prior to deployment of an AI vendor’s systems to manage identified safety and security risks and to address existing vulnerabilities, where possible.

Implement tools, such as watermarks, content labels, and authentication techniques, to assist the public in identifying AI-generated content.
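The CISA Guidelines do not prescribe particular metrics for the ‘Measure’ function. By way of illustration only, the following minimal Python sketch (not drawn from the Guidelines) shows one widely used way to put a number on the data drift mentioned above: the population stability index (PSI), which compares the distribution of an AI system’s recent inputs against a baseline such as its training data. The function names, the ten equal-width bins and the 0.2 alert threshold are assumptions made for this example; 0.2 is a common rule of thumb rather than a regulatory standard, and a real deployment would need thresholds tuned to the specific system.

```python
import math
from typing import List, Sequence

# Assumed alert threshold: a common rule of thumb, not a figure from the CISA Guidelines.
DRIFT_THRESHOLD = 0.2


def _bin_proportions(sample: Sequence[float], lo: float, width: float,
                     bins: int, eps: float) -> List[float]:
    """Share of the (non-empty) sample in each equal-width bin, floored at eps to avoid log(0)."""
    counts = [0] * bins
    for x in sample:
        idx = min(int((x - lo) / width), bins - 1)  # clamp the maximum value into the last bin
        counts[idx] += 1
    return [max(c / len(sample), eps) for c in counts]


def population_stability_index(baseline: Sequence[float], recent: Sequence[float],
                               bins: int = 10, eps: float = 1e-6) -> float:
    """PSI = sum over bins of (recent share - baseline share) * ln(recent share / baseline share)."""
    lo = min(min(baseline), min(recent))
    hi = max(max(baseline), max(recent))
    width = (hi - lo) / bins or 1.0  # guard against every value being identical
    b = _bin_proportions(baseline, lo, width, bins, eps)
    r = _bin_proportions(recent, lo, width, bins, eps)
    return sum((ri - bi) * math.log(ri / bi) for bi, ri in zip(b, r))


def drift_alert(baseline: Sequence[float], recent: Sequence[float],
                threshold: float = DRIFT_THRESHOLD) -> bool:
    """True if recent inputs have drifted far enough from the baseline to warrant human review."""
    return population_stability_index(baseline, recent) > threshold


if __name__ == "__main__":
    baseline = [i / 100 for i in range(100)]      # hypothetical readings seen during training
    recent = [0.5 + i / 200 for i in range(100)]  # hypothetical readings after conditions shift
    print(population_stability_index(baseline, baseline))  # identical data: no drift
    print(drift_alert(baseline, recent))
```

In practice, an operator might run a check of this kind for each important input on a regular schedule and feed any alerts into the incident reporting and information-sharing processes that the Guidelines describe under ‘Govern’.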

Conclusion

While the CISA Guidelines are not rocket science, they serve as a useful reference point for critical infrastructure owners and operators who have implemented, or intend to implement, AI in their critical infrastructure systems. Naturally, they need to be adapted to each organisation, as the Guidelines themselves state:

Although these guidelines are broad enough to apply to all 16 critical infrastructure sectors, AI risks are highly contextual. Therefore, critical infrastructure owners and operators should consider these guidelines within their own specific, real-world circumstances.

Applying the CISA Guidelines to generative AI will likely prove to be a complex task given how quickly AI developments are moving and the complexity of their integrations with critical infrastructure systems.

The central dilemma for critical infrastructure owners and operators is striking the right balance between, on the one hand, fostering innovation and making use of the enormous benefits AI offers and, on the other, managing risk, given the potentially devastating consequences and vulnerabilities AI technologies could introduce into their operations.

The CISA Guidelines really don’t crack this nut. They lack concrete technical guidance that critical infrastructure operators can put into practice. The U.S. Department of Homeland Security has flagged that it is considering developing additional resources, including playbooks, to assist with the implementation of the CISA Guidelines based on evolving AI technologies, updates to the standards landscape (including the NIST AI RMF), and input from critical infrastructure risk assessments and stakeholders.

Read more: Safety and Security Guidelines for Critical Infrastructure Owners and Operators

Peter Waters

Consultant